Microsoft Research has just dropped its own version of a heavy metal quartet, tools that will power up the future of AI compilation.

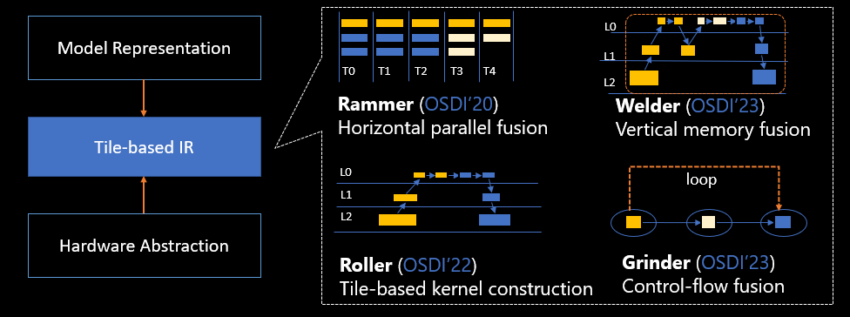

These four AI compilers, aptly nicknamed “Roller,” “Welder,” “Grinder,” and “Rammer” intend to redefine the way we think about computation efficiency, memory usage, and control flow within AI models.

Roller, the first of these, seeks to disrupt the status quo in AI model compilation, which often takes days or weeks to complete. The system reimagines the process of data partitioning within accelerators. Roller functions like a road roller, meticulously placing high-dimensional tensor data onto two-dimensional memory, akin to tiling a floor.

Interested in learning more about Microsoft’s AI chatbot, Bing? Click here.

The compiler ensures faster compilation with good computation efficiency, focusing on how to best use available memory. Recent evaluations suggest that Roller can generate highly optimized kernels in seconds, outperforming existing compilers by three orders of magnitude.

Welder takes aim at the memory efficiency issue inherent in modern Deep Neural Network (DNN) models. The compiler is designed to remedy the misalignment between computing cores’ utilization and saturated memory bandwidth.

Utilizing a technique analogous to assembly line production, Welder “welds” different stages of the computational process together. This reduces unnecessary data transfers, thereby significantly enhancing memory access efficiency.

Tests on NVIDIA and AMD GPUs indicate that Welder’s performance surpasses mainstream frameworks, with speedups reaching 21.4 times compared to PyTorch.

Grinder Speeds Up Processes by 8x

Grinder focuses on another crucial aspect – efficient control flow execution. In layman’s terms, it aims to make AI models smarter in determining what to execute and when. By “grinding” control flow into data flow, Grinder enhances the overall efficiency of models with more complex decision-making pathways.

Want to become more productive? Our Learn team gives a rundown of the 18 best tools here.

Experimental data shows that Grinder achieves up to an 8.2x speedup on control flow-intensive DNN models, outperforming existing frameworks.

Finally, Rammer works on maximizing hardware parallelism. This refers to the capacity of hardware to do different things simultaneously.

This heavy metal quartet of Microsoft AI compilers is built on a common abstraction and unified intermediate representation, forming a comprehensive set of solutions for tackling parallelism, compilation efficiency, memory, and control flow.

Jilong Xue, Principal Researcher at Microsoft Research Asia, said:

“The AI compilers we developed have demonstrated a substantial improvement in AI compilation efficiency, thereby facilitating the training and deployment of AI models.”

Leave a Reply